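- 🍨 This post is a learning-log entry from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊 | tutoring and custom projects available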


目录

- 0. 总结

- 1. 数据导入及处理部分

- 2. 加载数据集

- 3.模型构建部分

- 3.1 模型构建

- 3.2 公式推导

- 4. 设置超参数(设置初始学习率,动态学习率,梯度下降优化器,损失函数)

- 4.1 设置设置初始学习率,动态学习率,梯度下降优化器,损失函数

- 4.2 动态学习率代码说明

- 5. 训练函数

- 6. 测试函数

- 7. 正式训练

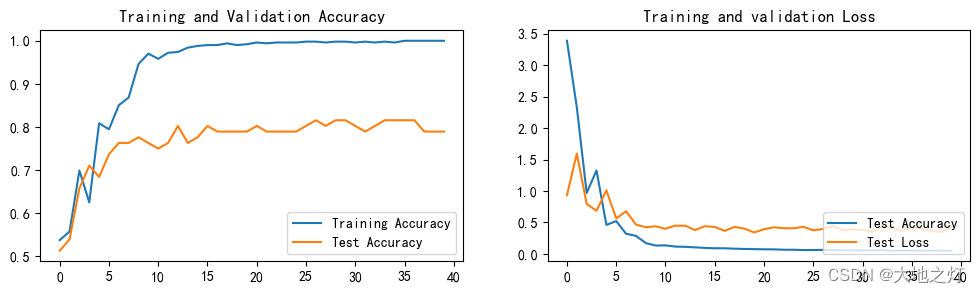

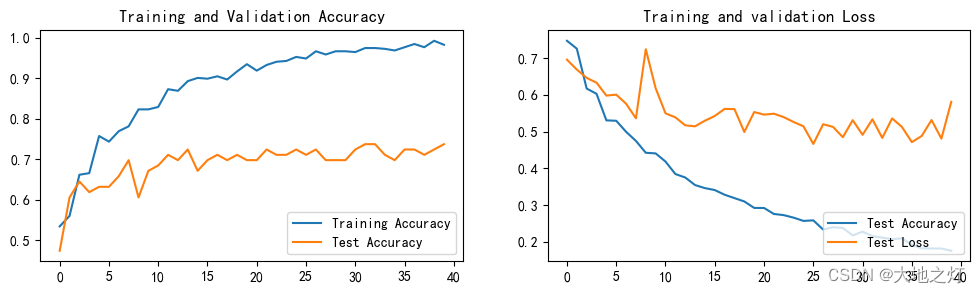

- 8. 结果与可视化

- 9. 保存并加载模型

- 10. 使用训练好的模型进行预测

- 11.不同参数模型预测

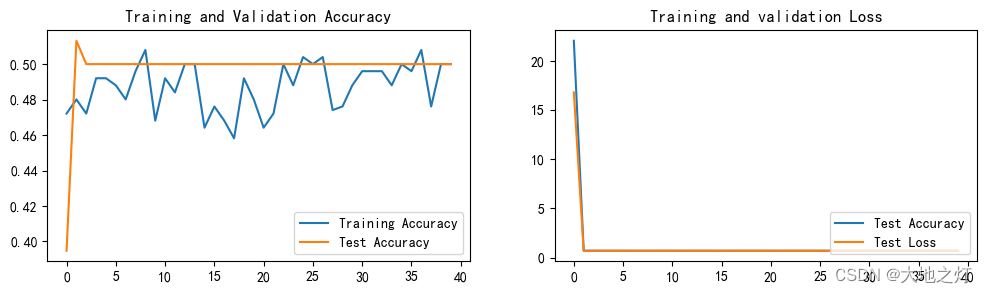

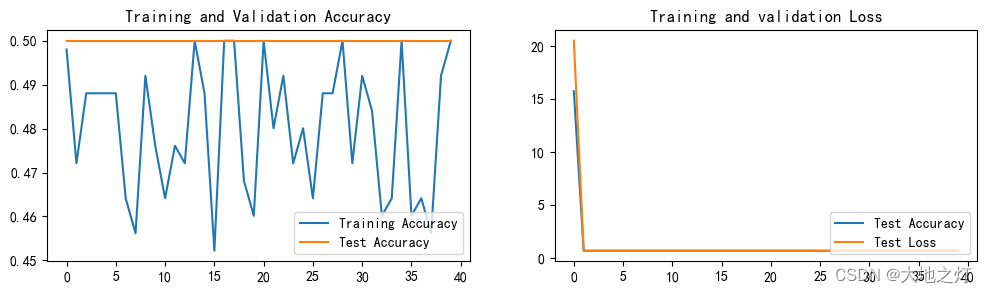

- 11.1 固定学习率

- 1e-1

- 1e-2

- 1e-3

- 1e-4

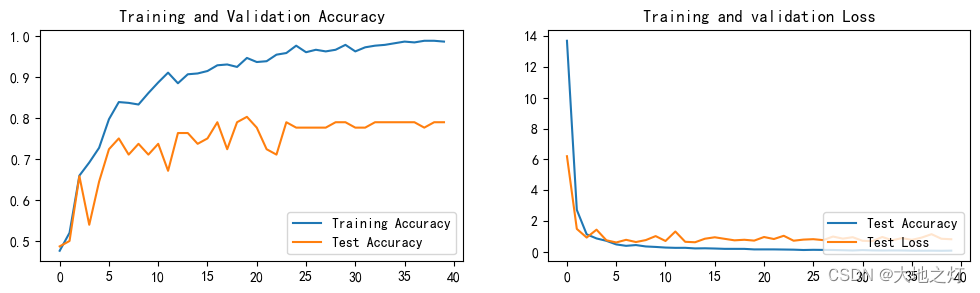

- 11.2 动态学习率

- 1e-1

- 1e-2

- 1e-3

- 1e-4

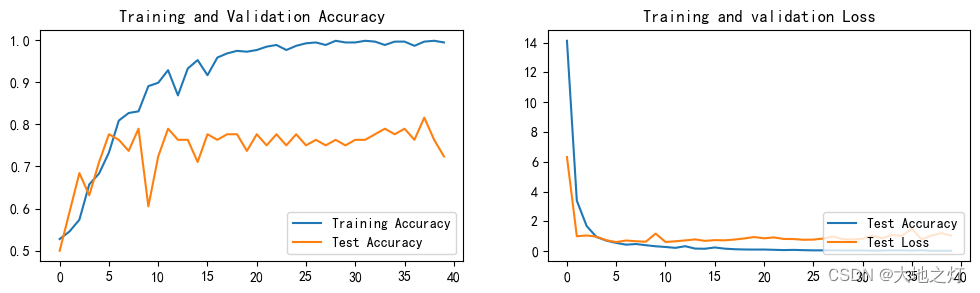

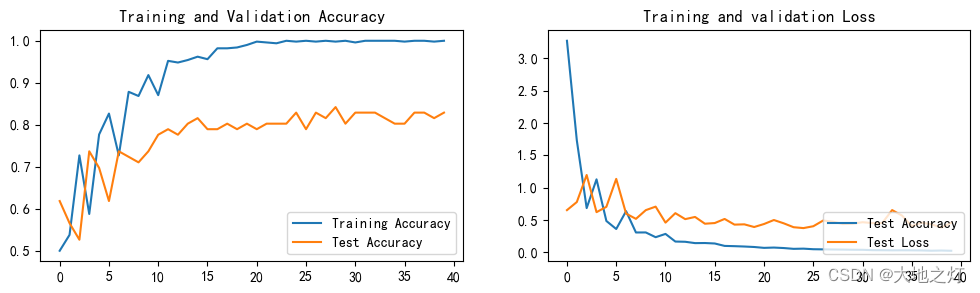

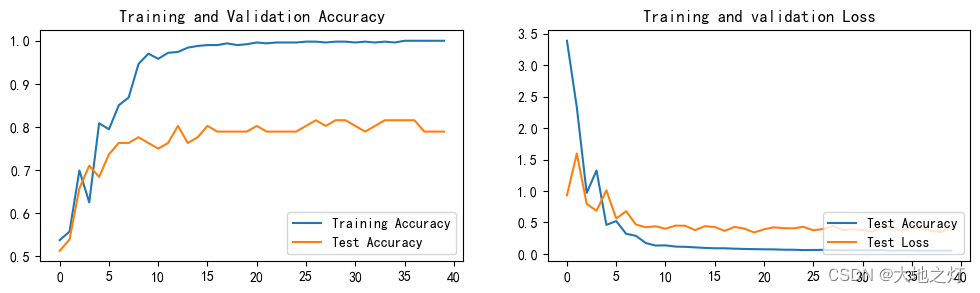

- 训练集水平翻转 + 动态学习率

- 1e-3

- 1e-4

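0. Summary

Data import and preprocessing: this time the data does not come from a dataset bundled with torchvision, so the raw images have to be processed by hand, which includes importing them and converting their data type.

Splitting the dataset: once the training and test sets are fixed, load them with DataLoader() from torch.utils.data. Note: unlike previous exercises, this dataset is already split into training and test folders.

Model construction: there are two parts. The initialization part (__init__()) lists every layer of the network, such as the convolution and pooling layers. The forward part defines how the data flows through those layers. Note: unlike the approach used when first learning, this model uses nn.Sequential, which wraps a series of modules or operations into one compact module.

Setting hyperparameters: before training, define the loss function, the learning rate, and an optimizer built on that learning rate (for example SGD, stochastic gradient descent), which updates the parameters during training to minimize the loss. Note: unlike previous exercises, a dynamic learning rate is used this time.

Training function: it takes four arguments: the prepared DataLoader(), the model, the loss function, and the optimizer. Inside, loss and accuracy are initialized to 0 and the loop starts: DataLoader() yields one batch, the batch is fed to the model to get predictions, and the loss function computes the loss. Next come backpropagation and the optimizer step. Gradients can be zeroed either before backpropagation or after the optimizer step; the usual convention is to zero them before backpropagation.

Test function: compared with the training function it drops the optimizer and takes only the DataLoader(), the model, and the loss function. Apart from skipping gradient zeroing, backpropagation, and the optimizer step when processing each batch, it matches the training function.

Training process: set the number of epochs; each epoch trains once over the whole dataset. Initialize four empty lists to store the training and test accuracy and loss of every epoch. Call model.train() to enable training mode and run the training function to get accuracy and loss. Call model.eval() to switch to evaluation mode and run the test function to get accuracy and loss. Then append the four values to their lists and print them together, which gives the accuracy and loss after each full epoch. Studying how experienced practitioners tune their models is a good way to improve the result.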

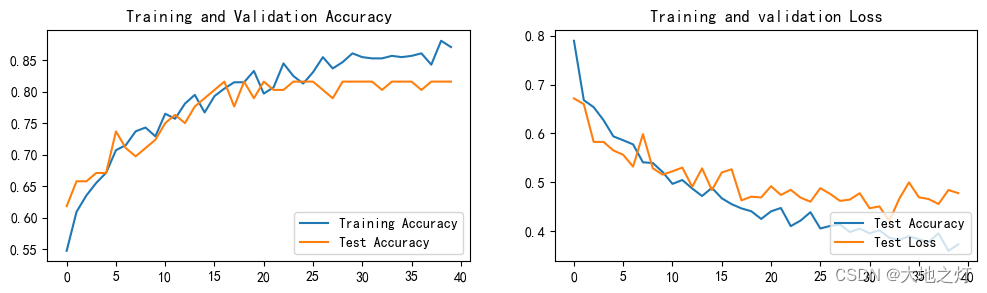

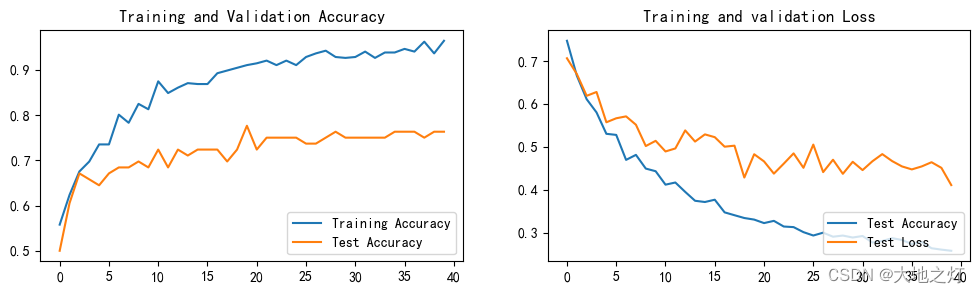

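Result visualization

Saving, loading, and using the model. In PyTorch, the parameters are usually saved with torch.save(model.state_dict(), 'model.pth') and loaded with model.load_state_dict(torch.load('model.pth')).

Areas to improve: with the overall pipeline working, keep digging into the details, such as how individual functions work and what they do, and how to raise training accuracy. I expected that augmentations such as horizontally flipping the training images would lower test accuracy, but in practice flipping the training images may improve the robustness of predictions on the test set.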

1. Data import and preprocessing

import torch
import torch.nn as nn
import torchvision
from torchvision import transforms, datasets
import os, PIL, pathlib, random
import matplotlib.pyplot as plt
import warnings

warnings.filterwarnings("ignore")  # suppress warning messages

plt.rcParams['font.sans-serif'] = ['SimHei']  # font used so that Chinese labels render correctly
plt.rcParams['axes.unicode_minus'] = False    # render the minus sign correctly
plt.rcParams['figure.dpi'] = 100              # figure resolution

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device

device(type='cuda')

# Inspect the class names
data_dir = './data/sneaker_recognize/test/'
data_dir = pathlib.Path(data_dir)      # convert the path string into a pathlib.Path object
data_paths = list(data_dir.glob('*'))  # collect every entry directly under data_dir into a list
# classNames = [str(path).split('\\')[-1] for path in data_paths]  # alternative that splits on the backslash path separator
classNames = [path.parts[-1] for path in data_paths]  # .parts returns a tuple of the path components; the last one is the class folder name
classNames

['adidas', 'nike']

train_transforms = transforms.Compose([
    transforms.Resize([224, 224]),         # resize every input image to the same size
    # transforms.RandomHorizontalFlip(),   # random horizontal flip
    transforms.ToTensor(),                 # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
    transforms.Normalize(                  # standardize each channel as (x - mean) / std, which tends to make convergence easier
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225]          # the widely used ImageNet per-channel mean and std (estimated from ImageNet, not from this sneaker dataset)
    )
])

test_transforms = transforms.Compose([
    transforms.Resize([224, 224]),
    transforms.ToTensor(),
    transforms.Normalize(
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225]
    )
])

train_dataset = datasets.ImageFolder("./data/sneaker_recognize/train/", transform=train_transforms)
test_dataset = datasets.ImageFolder("./data/sneaker_recognize/test", transform=test_transforms)

train_dataset.class_to_idx

{'adidas': 0, 'nike': 1}
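The Normalize step in the transforms above is plain per-channel arithmetic. As a minimal pure-Python sketch (the helper names `normalize_pixel` and `denormalize_pixel` are made up for illustration and are not part of torchvision), this is what happens to a single RGB pixel that ToTensor has already scaled to [0, 1]:

```python
# Per-channel statistics copied from the transforms above.
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb):
    """Apply (x - mean) / std to one RGB pixel already scaled to [0, 1]."""
    return [(x - m) / s for x, m, s in zip(rgb, MEAN, STD)]


def denormalize_pixel(rgb):
    """Invert the normalization, e.g. before displaying an image."""
    return [x * s + m for x, m, s in zip(rgb, MEAN, STD)]


black = normalize_pixel([0.0, 0.0, 0.0])   # darkest possible pixel
white = normalize_pixel([1.0, 1.0, 1.0])   # brightest possible pixel
print(black)
print(white)
```

A pixel equal to the channel means maps to zero, darker pixels become negative and brighter ones positive, so the inputs the network sees are roughly centered around zero.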

2. Loading the dataset

# Load the data with DataLoader and set the batch size
batch_size = 32

train_dl = torch.utils.data.DataLoader(
    train_dataset,
    batch_size=batch_size,
    shuffle=True,
    num_workers=1
)

test_dl = torch.utils.data.DataLoader(
    test_dataset,
    batch_size=batch_size,
    shuffle=True,
    num_workers=1
)

for X, y in test_dl:
    print("Shape of X [N,C,H,W]: ", X.shape)
    print("Shape of y: ", y.shape, y.dtype)
    break

Shape of X [N,C,H,W]: torch.Size([32, 3, 224, 224])

Shape of y: torch.Size([32]) torch.int64

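3. Model construction

3.1 Building the model

nn.Sequential is a convenient container module provided by PyTorch that wraps a series of modules or operations into one compact module. With nn.Sequential you stack the network components (layers, activation functions, normalization layers) in the order they should run, which simplifies building the model and makes it easier to read.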

import torch.nn.functional as F

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 12, kernel_size=5, padding=0),   # 12 * 220 * 220
            nn.BatchNorm2d(12),
            nn.ReLU()
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(12, 12, kernel_size=5, padding=0),  # 12 * 216 * 216
            nn.BatchNorm2d(12),
            nn.ReLU()
        )
        self.pool3 = nn.Sequential(
            nn.MaxPool2d(2)                               # 12 * 108 * 108
        )
        self.conv4 = nn.Sequential(
            nn.Conv2d(12, 24, kernel_size=5, padding=0),  # 24 * 104 * 104
            nn.BatchNorm2d(24),
            nn.ReLU()
        )
        self.conv5 = nn.Sequential(
            nn.Conv2d(24, 24, kernel_size=5, padding=0),  # 24 * 100 * 100
            nn.BatchNorm2d(24),
            nn.ReLU()
        )
        self.pool6 = nn.Sequential(
            nn.MaxPool2d(2)                               # 24 * 50 * 50
        )
        self.dropout = nn.Sequential(
            nn.Dropout(0.2)
        )
        self.fc = nn.Sequential(
            nn.Linear(24 * 50 * 50, len(classNames))
        )

    def forward(self, x):
        batch_size = x.size(0)
        x = self.conv1(x)    # conv - BN - activation
        x = self.conv2(x)    # conv - BN - activation
        x = self.pool3(x)    # pooling
        x = self.conv4(x)    # conv - BN - activation
        x = self.conv5(x)    # conv - BN - activation
        x = self.pool6(x)    # pooling
        x = self.dropout(x)
        x = x.view(batch_size, -1)  # flatten (batch, 24, 50, 50) to (batch, -1) for the fully connected layer; -1 is inferred as 24*50*50
        x = self.fc(x)
        return x

print("Using {} device".format(device))

model = Model().to(device)
model

Using cuda device

Model(

(conv1): Sequential(

(0): Conv2d(3, 12, kernel_size=(5, 5), stride=(1, 1))

(1): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

)

(conv2): Sequential(

(0): Conv2d(12, 12, kernel_size=(5, 5), stride=(1, 1))

(1): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

)

(pool3): Sequential(

(0): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(conv4): Sequential(

(0): Conv2d(12, 24, kernel_size=(5, 5), stride=(1, 1))

(1): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

)

(conv5): Sequential(

(0): Conv2d(24, 24, kernel_size=(5, 5), stride=(1, 1))

(1): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU()

)

(pool6): Sequential(

(0): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(dropout): Sequential(

(0): Dropout(p=0.2, inplace=False)

)

(fc): Sequential(

(0): Linear(in_features=60000, out_features=2, bias=True)

)

)

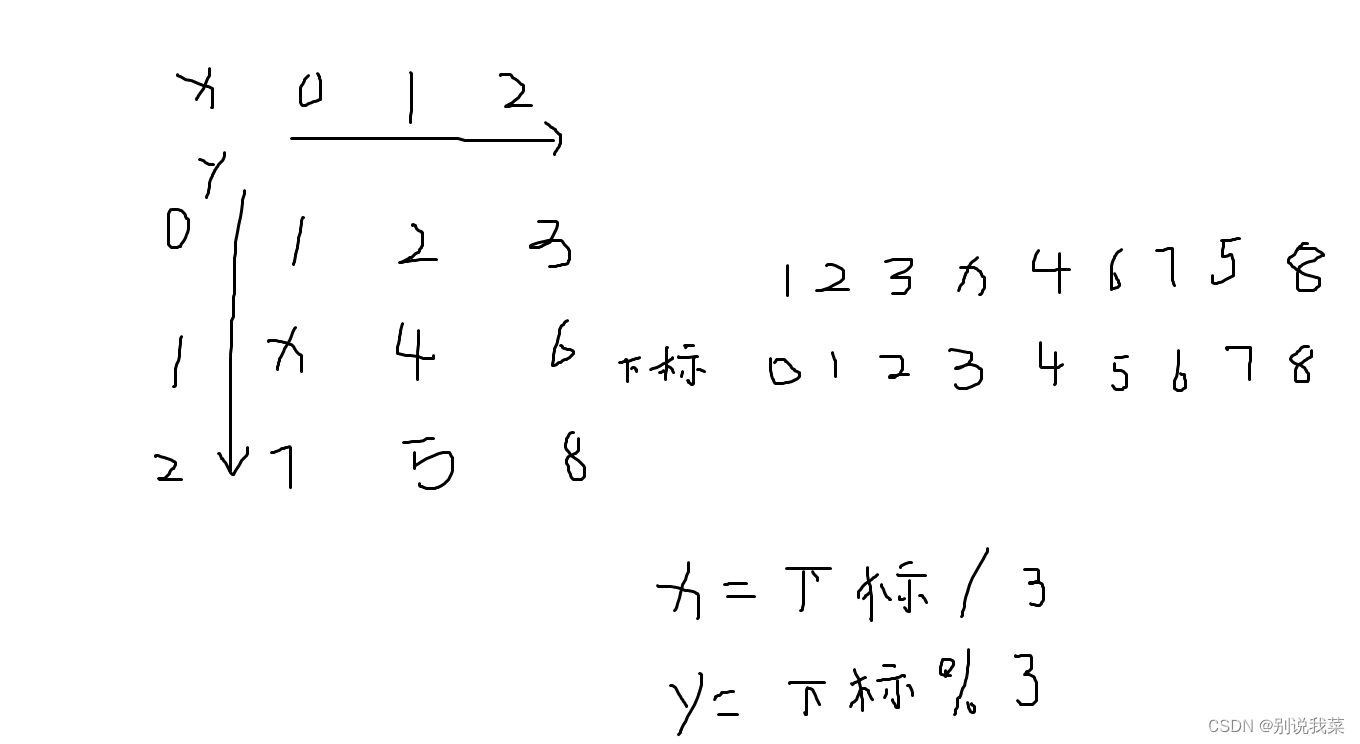

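3.2 Shape derivation

3, 224, 224 (input)

-> 12, 220, 220 (after conv layer 1)

-> 12, 216, 216 (after conv layer 2) -> 12, 108, 108 (after pooling layer 1)

-> 24, 104, 104 (after conv layer 3)

-> 24, 100, 100 (after conv layer 4) -> 24, 50, 50 (after pooling layer 2)

-> 60000 -> num_classes (2)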

Formulas:

Convolution output size:

- Height: $H_{out} = \frac{\left(H_{in} - Kernel\_size + 2\times padding\right)}{stride} + 1$

- Width: $W_{out} = \frac{\left(W_{in} - Kernel\_size + 2\times padding\right)}{stride} + 1$

- Channels: the number of output channels is whatever the convolution layer defines as its output channels, for example self.conv1 = nn.Conv2d(3,64,kernel_size = 3). Here the output has 64 channels, which means the layer uses 64 different kernels, each producing one new channel. Note that a kernel does not operate on a single input channel: each kernel convolves across all input channels (3 in this example) and contributes one output channel.

Pooling output size:

- Height: $H_{out} = \left(\frac{H_{in} + 2 \times padding_H - dilation_H \times (kernel\_size_H - 1) - 1}{stride_H} + 1 \right)$

- Width: $W_{out} = \left( \frac{W_{in} + 2 \times padding_W - dilation_W \times (kernel\_size_W - 1) - 1}{stride_W} + 1 \right)$

where:

- $H_{in}$ and $W_{in}$ are the input height and width.

- $padding_H$ and $padding_W$ are the padding in the height and width directions.

- $kernel\_size_H$ and $kernel\_size_W$ are the size of the convolution or pooling kernel in the height and width directions.

- $stride_H$ and $stride_W$ are the stride in the height and width directions.

- $dilation_H$ and $dilation_W$ are the dilation factors in the height and width directions.

Note that $dilation \times (kernel\_size - 1) + 1$ is the extent the kernel covers after dilation. For example, a $3 \times 3$ kernel with dilation 2 covers a $5 \times 5$ region (the original kernel size plus the gaps introduced by dilation). When the division by the stride is not exact, PyTorch rounds the quotient down by default.
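The formulas above can be checked against the shape chain in section 3.2 with a few lines of plain Python. This is only a sketch: `conv_out` and `trace_shapes` are hypothetical helper names, and the layer list is transcribed by hand from the model in section 3.1 (kernel size 5 and stride 1 for the convolutions, kernel size 2 and stride 2 for the pooling layers, no padding).

```python
def conv_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one axis for a convolution or pooling layer."""
    effective_kernel = dilation * (kernel_size - 1) + 1  # extent covered after dilation
    return (size + 2 * padding - effective_kernel) // stride + 1


def trace_shapes(size=224):
    """Follow one spatial dimension through the layers of the model."""
    layers = [
        (5, 1),  # conv1
        (5, 1),  # conv2
        (2, 2),  # pool3
        (5, 1),  # conv4
        (5, 1),  # conv5
        (2, 2),  # pool6
    ]
    shapes = [size]
    for kernel_size, stride in layers:
        size = conv_out(size, kernel_size, stride)
        shapes.append(size)
    return shapes


shapes = trace_shapes(224)
print(shapes)                 # [224, 220, 216, 108, 104, 100, 50]
print(24 * shapes[-1] ** 2)   # 60000, the in_features of the final Linear layer
```

The last value, 24 channels times 50 by 50, matches the in_features=60000 reported in the printed model.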

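4. Setting hyperparameters (initial learning rate, dynamic learning rate, gradient-descent optimizer, loss function)

4.1 Setting the initial learning rate, dynamic learning rate, gradient-descent optimizer, and loss function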

loss_fn = nn.CrossEntropyLoss()  # create the loss function

def adjust_learning_rate(optimizer, epoch, start_lr):
    # every two epochs the learning rate decays to 0.92 of its previous value
    lr = start_lr * (0.92 ** (epoch // 2))
    for param_group in optimizer.param_groups:
        param_group['lr'] = lr

learn_rate = 1e-3  # initial learning rate
optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)

# Example of the same schedule using the official scheduler interface
# loss_fn = nn.CrossEntropyLoss()  # create the loss function
# lambda1 = lambda epoch: (0.92 ** (epoch // 2))
# learn_rate = 1e-4  # initial learning rate
# optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)
# scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1)  # choose the scheduling method

4.2 Notes on the dynamic learning rate code

Worked example:

Assume the initial learning rate (start_lr) is 0.01. The learning rate over the first 10 epochs is:

- Epoch 0: $0.01 \times 0.92^{(0 // 2)} = 0.01 \times 0.92^{0} = 0.01$

- Epoch 1: $0.01 \times 0.92^{(1 // 2)} = 0.01 \times 0.92^{0} = 0.01$

- Epoch 2: $0.01 \times 0.92^{(2 // 2)} = 0.01 \times 0.92^{1} = 0.0092$

- Epoch 3: $0.01 \times 0.92^{(3 // 2)} = 0.01 \times 0.92^{1} = 0.0092$

- Epoch 4: $0.01 \times 0.92^{(4 // 2)} = 0.01 \times 0.92^{2} = 0.008464$

- Epoch 5: $0.01 \times 0.92^{(5 // 2)} = 0.01 \times 0.92^{2} = 0.008464$

- Epoch 6: $0.01 \times 0.92^{(6 // 2)} = 0.01 \times 0.92^{3} = 0.007787$

- Epoch 7: $0.01 \times 0.92^{(7 // 2)} = 0.01 \times 0.92^{3} = 0.007787$

- Epoch 8: $0.01 \times 0.92^{(8 // 2)} = 0.01 \times 0.92^{4} = 0.007164$

- Epoch 9: $0.01 \times 0.92^{(9 // 2)} = 0.01 \times 0.92^{4} = 0.007164$

As the calculation shows, the learning rate drops once every two epochs. This gradual decay helps fine-tune the network weights, especially once training starts to converge toward a minimum of the loss. A lower learning rate helps avoid overshooting that minimum, which can lead to better and more stable training results.
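The table above can be reproduced without PyTorch. The sketch below mirrors the formula inside adjust_learning_rate; the function name `scheduled_lr` and its `decay` and `step` parameters are illustrative names, not part of the original code.

```python
def scheduled_lr(start_lr, epoch, decay=0.92, step=2):
    """Learning rate used at a given zero-based epoch."""
    return start_lr * decay ** (epoch // step)


# Learning rate for the first 10 epochs with start_lr = 0.01.
for epoch in range(10):
    print(f"Epoch {epoch}: {scheduled_lr(0.01, epoch):.6f}")
```

With start_lr = 1e-3 the same function gives about 2.05e-4 for the last of 40 epochs (zero-based epoch 39), which agrees with the Lr column printed in the training log of section 7.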

5. Training function

# Training loop
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)  # number of samples in the training set
    num_batches = len(dataloader)   # number of batches

    train_loss, train_acc = 0, 0    # initialize training loss and accuracy

    for X, y in dataloader:         # fetch a batch of images and labels
        X, y = X.to(device), y.to(device)

        # compute the prediction error
        pred = model(X)
        loss = loss_fn(pred, y)

        # backpropagation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # accumulate accuracy and loss
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        train_loss += loss.item()

    train_acc /= size
    train_loss /= num_batches

    return train_acc, train_loss

6. Test function

# Test function
def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)  # number of samples in the test set
    num_batches = len(dataloader)   # number of batches

    test_acc, test_loss = 0, 0

    with torch.no_grad():           # no gradients are needed for evaluation
        for X, y in dataloader:
            X, y = X.to(device), y.to(device)

            # compute the loss
            pred = model(X)
            loss = loss_fn(pred, y)

            test_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
            test_loss += loss.item()

    test_acc /= size
    test_loss /= num_batches

    return test_acc, test_loss
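Both functions above divide the accumulated number of correct predictions by the number of samples, and the accumulated loss by the number of batches. A minimal pure-Python sketch of that bookkeeping follows; the `summarize` helper and the numbers in `batches` are invented for illustration and are not results from this run.

```python
def summarize(batches):
    """batches: list of (num_correct, num_samples, mean_batch_loss) tuples."""
    size = sum(n for _, n, _ in batches)   # plays the role of len(dataloader.dataset)
    num_batches = len(batches)             # plays the role of len(dataloader)
    acc = sum(c for c, _, _ in batches) / size
    loss = sum(l for _, _, l in batches) / num_batches
    return acc, loss


# Hypothetical epoch: two full batches of 32 and a final partial batch of 12.
batches = [(24, 32, 0.50), (28, 32, 0.40), (9, 12, 0.90)]
acc, loss = summarize(batches)
print(f"acc={acc:.3f}, loss={loss:.3f}")
```

Accuracy is a true per-sample average, while the loss is an average of per-batch means, so a small final batch weighs as much as a full one in the reported loss.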

7. Training

epochs = 40

train_acc = []
train_loss = []
test_acc = []
test_loss = []

for epoch in range(epochs):
    # update the learning rate (when using the custom schedule)
    adjust_learning_rate(optimizer, epoch, learn_rate)

    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)
    # scheduler.step()  # update the learning rate (when using the official scheduler interface)

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # read the current learning rate
    lr = optimizer.state_dict()['param_groups'][0]['lr']

    template = ('Epoch:{:2d}, Train_acc:{:.1f}%,Train_loss:{:.3f},Test_acc:{:.1f}%,Test_loss:{:.3f},Lr:{:.2E}')
    print(template.format(epoch+1, epoch_train_acc * 100, epoch_train_loss, epoch_test_acc * 100, epoch_test_loss, lr))

print('Done')

Epoch: 1, Train_acc:53.8%,Train_loss:3.389,Test_acc:51.3%,Test_loss:0.933,Lr:1.00E-03

Epoch: 2, Train_acc:55.8%,Train_loss:2.331,Test_acc:53.9%,Test_loss:1.597,Lr:1.00E-03

Epoch: 3, Train_acc:69.9%,Train_loss:0.969,Test_acc:65.8%,Test_loss:0.796,Lr:9.20E-04

Epoch: 4, Train_acc:62.5%,Train_loss:1.327,Test_acc:71.1%,Test_loss:0.686,Lr:9.20E-04

Epoch: 5, Train_acc:80.9%,Train_loss:0.462,Test_acc:68.4%,Test_loss:1.011,Lr:8.46E-04

Epoch: 6, Train_acc:79.5%,Train_loss:0.522,Test_acc:73.7%,Test_loss:0.564,Lr:8.46E-04

Epoch: 7, Train_acc:85.1%,Train_loss:0.321,Test_acc:76.3%,Test_loss:0.680,Lr:7.79E-04

Epoch: 8, Train_acc:86.9%,Train_loss:0.288,Test_acc:76.3%,Test_loss:0.467,Lr:7.79E-04

Epoch: 9, Train_acc:94.6%,Train_loss:0.173,Test_acc:77.6%,Test_loss:0.425,Lr:7.16E-04

Epoch:10, Train_acc:97.0%,Train_loss:0.134,Test_acc:76.3%,Test_loss:0.439,Lr:7.16E-04

Epoch:11, Train_acc:95.8%,Train_loss:0.136,Test_acc:75.0%,Test_loss:0.400,Lr:6.59E-04

Epoch:12, Train_acc:97.2%,Train_loss:0.117,Test_acc:76.3%,Test_loss:0.450,Lr:6.59E-04

Epoch:13, Train_acc:97.4%,Train_loss:0.113,Test_acc:80.3%,Test_loss:0.448,Lr:6.06E-04

Epoch:14, Train_acc:98.4%,Train_loss:0.104,Test_acc:76.3%,Test_loss:0.379,Lr:6.06E-04

Epoch:15, Train_acc:98.8%,Train_loss:0.096,Test_acc:77.6%,Test_loss:0.441,Lr:5.58E-04

Epoch:16, Train_acc:99.0%,Train_loss:0.090,Test_acc:80.3%,Test_loss:0.428,Lr:5.58E-04

Epoch:17, Train_acc:99.0%,Train_loss:0.090,Test_acc:78.9%,Test_loss:0.367,Lr:5.13E-04

Epoch:18, Train_acc:99.4%,Train_loss:0.084,Test_acc:78.9%,Test_loss:0.429,Lr:5.13E-04

Epoch:19, Train_acc:99.0%,Train_loss:0.079,Test_acc:78.9%,Test_loss:0.403,Lr:4.72E-04

Epoch:20, Train_acc:99.2%,Train_loss:0.076,Test_acc:78.9%,Test_loss:0.341,Lr:4.72E-04

Epoch:21, Train_acc:99.6%,Train_loss:0.073,Test_acc:80.3%,Test_loss:0.392,Lr:4.34E-04

Epoch:22, Train_acc:99.4%,Train_loss:0.073,Test_acc:78.9%,Test_loss:0.424,Lr:4.34E-04

Epoch:23, Train_acc:99.6%,Train_loss:0.067,Test_acc:78.9%,Test_loss:0.409,Lr:4.00E-04

Epoch:24, Train_acc:99.6%,Train_loss:0.067,Test_acc:78.9%,Test_loss:0.407,Lr:4.00E-04

Epoch:25, Train_acc:99.6%,Train_loss:0.061,Test_acc:78.9%,Test_loss:0.431,Lr:3.68E-04

Epoch:26, Train_acc:99.8%,Train_loss:0.063,Test_acc:80.3%,Test_loss:0.376,Lr:3.68E-04

Epoch:27, Train_acc:99.8%,Train_loss:0.065,Test_acc:81.6%,Test_loss:0.397,Lr:3.38E-04

Epoch:28, Train_acc:99.6%,Train_loss:0.060,Test_acc:80.3%,Test_loss:0.446,Lr:3.38E-04

Epoch:29, Train_acc:99.8%,Train_loss:0.057,Test_acc:81.6%,Test_loss:0.378,Lr:3.11E-04

Epoch:30, Train_acc:99.8%,Train_loss:0.058,Test_acc:81.6%,Test_loss:0.391,Lr:3.11E-04

Epoch:31, Train_acc:99.6%,Train_loss:0.057,Test_acc:80.3%,Test_loss:0.380,Lr:2.86E-04

Epoch:32, Train_acc:99.8%,Train_loss:0.056,Test_acc:78.9%,Test_loss:0.353,Lr:2.86E-04

Epoch:33, Train_acc:99.6%,Train_loss:0.059,Test_acc:80.3%,Test_loss:0.438,Lr:2.63E-04

Epoch:34, Train_acc:99.8%,Train_loss:0.051,Test_acc:81.6%,Test_loss:0.420,Lr:2.63E-04

Epoch:35, Train_acc:99.6%,Train_loss:0.055,Test_acc:81.6%,Test_loss:0.368,Lr:2.42E-04

Epoch:36, Train_acc:100.0%,Train_loss:0.053,Test_acc:81.6%,Test_loss:0.417,Lr:2.42E-04

Epoch:37, Train_acc:100.0%,Train_loss:0.050,Test_acc:81.6%,Test_loss:0.412,Lr:2.23E-04

Epoch:38, Train_acc:100.0%,Train_loss:0.052,Test_acc:78.9%,Test_loss:0.366,Lr:2.23E-04

Epoch:39, Train_acc:100.0%,Train_loss:0.051,Test_acc:78.9%,Test_loss:0.349,Lr:2.05E-04

Epoch:40, Train_acc:100.0%,Train_loss:0.051,Test_acc:78.9%,Test_loss:0.427,Lr:2.05E-04

Done

8. Results and visualization

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)
plt.plot(epochs_range, train_acc, label='Training Accuracy')
plt.plot(epochs_range, test_acc, label='Test Accuracy')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_loss, label='Training Loss')  # label corrected: this curve is the training loss
plt.plot(epochs_range, test_loss, label='Test Loss')
plt.legend(loc='lower right')
plt.title('Training and validation Loss')

plt.show()

9. Saving and loading the model

# Save the whole model
# torch.save(model, 'sneaker_rec_model.pth')

# Load the whole model
# model2 = torch.load('sneaker_rec_model.pth')
# model2 = model2.to(device)  # a loaded model normally goes back to the device it was saved from; moving it explicitly avoids surprises

# Save only the state dict
torch.save(model.state_dict(), 'sneaker_rec_model_state_dict.pth')

# Load the state dict into a model
model2 = Model().to(device)  # create a fresh model to receive the parameters
model2.load_state_dict(torch.load('sneaker_rec_model_state_dict.pth'))

<All keys matched successfully>

10. Predicting with the trained model

# Predict a single image given its path
from PIL import Image
import torchvision.transforms as transforms

classes = list(train_dataset.class_to_idx)  # classes = list(total_data.class_to_idx)

def predict_one_image(image_path, model, transform, classes):
    test_img = Image.open(image_path).convert('RGB')
    # plt.imshow(test_img)  # show the image being predicted

    test_img = transform(test_img)
    img = test_img.to(device).unsqueeze(0)  # add the batch dimension

    model.eval()
    output = model(img)
    print(output)  # inspect the raw model output

    _, pred = torch.max(output, 1)
    pred_class = classes[pred]
    print(f'Predicted class: {pred_class}')

# Predict one image from the test set
predict_one_image(image_path='./data/sneaker_recognize/test/adidas/1.jpg',
                  model=model,
                  transform=test_transforms,
                  classes=classes)

tensor([[ 0.2667, -0.2810]], device='cuda:0', grad_fn=<AddmmBackward0>)

Predicted class: adidas
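The two numbers in the printed tensor above are raw scores (logits), one per class, and torch.max simply picks the larger one. To read them as probabilities, a softmax can be applied. The sketch below does this in plain Python with the logits copied from the output above; `softmax` here is a hand-written helper for illustration, not the PyTorch function.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


classes = ['adidas', 'nike']   # same order as class_to_idx
logits = [0.2667, -0.2810]     # values copied from the printed tensor
probs = softmax(logits)
best = max(range(len(probs)), key=probs.__getitem__)
print(dict(zip(classes, probs)), classes[best])
```

For these logits the model is only moderately confident: the adidas probability comes out at roughly 0.63, so the prediction is correct but not by a wide margin.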

11. Predictions with different hyperparameters

11.1 Fixed learning rate

1e-1

Epoch: 1, Train_acc:47.2%,Train_loss:22.085,Test_acc:39.5%,Test_loss:16.832,Lr:1.00E-01

Epoch: 2, Train_acc:48.0%,Train_loss:0.695,Test_acc:51.3%,Test_loss:0.694,Lr:1.00E-01

Epoch: 3, Train_acc:47.2%,Train_loss:0.698,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch: 4, Train_acc:49.2%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch: 5, Train_acc:49.2%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch: 6, Train_acc:48.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch: 7, Train_acc:48.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch: 8, Train_acc:49.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch: 9, Train_acc:50.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.692,Lr:1.00E-01

Epoch:10, Train_acc:46.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:11, Train_acc:49.2%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:12, Train_acc:48.4%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:13, Train_acc:50.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:14, Train_acc:50.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:15, Train_acc:46.4%,Train_loss:0.692,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:16, Train_acc:47.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:17, Train_acc:46.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:18, Train_acc:45.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch:19, Train_acc:49.2%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:20, Train_acc:48.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch:21, Train_acc:46.4%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:22, Train_acc:47.2%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:23, Train_acc:50.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch:24, Train_acc:48.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:25, Train_acc:50.4%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:26, Train_acc:50.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch:27, Train_acc:50.4%,Train_loss:0.696,Test_acc:50.0%,Test_loss:0.692,Lr:1.00E-01

Epoch:28, Train_acc:47.4%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch:29, Train_acc:47.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch:30, Train_acc:48.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:31, Train_acc:49.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:32, Train_acc:49.6%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:33, Train_acc:49.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.692,Lr:1.00E-01

Epoch:34, Train_acc:48.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.692,Lr:1.00E-01

Epoch:35, Train_acc:50.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:36, Train_acc:49.6%,Train_loss:0.696,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:37, Train_acc:50.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:38, Train_acc:47.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch:39, Train_acc:50.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.694,Lr:1.00E-01

Epoch:40, Train_acc:50.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Done

1e-2

Epoch: 1, Train_acc:52.8%,Train_loss:14.123,Test_acc:50.0%,Test_loss:6.319,Lr:1.00E-02

Epoch: 2, Train_acc:54.6%,Train_loss:3.390,Test_acc:59.2%,Test_loss:1.002,Lr:1.00E-02

Epoch: 3, Train_acc:57.4%,Train_loss:1.683,Test_acc:68.4%,Test_loss:1.051,Lr:1.00E-02

Epoch: 4, Train_acc:65.7%,Train_loss:0.947,Test_acc:63.2%,Test_loss:0.971,Lr:1.00E-02

Epoch: 5, Train_acc:68.3%,Train_loss:0.705,Test_acc:71.1%,Test_loss:0.732,Lr:1.00E-02

Epoch: 6, Train_acc:73.3%,Train_loss:0.552,Test_acc:77.6%,Test_loss:0.609,Lr:1.00E-02

Epoch: 7, Train_acc:80.9%,Train_loss:0.435,Test_acc:76.3%,Test_loss:0.713,Lr:1.00E-02

Epoch: 8, Train_acc:82.7%,Train_loss:0.478,Test_acc:73.7%,Test_loss:0.665,Lr:1.00E-02

Epoch: 9, Train_acc:83.1%,Train_loss:0.398,Test_acc:78.9%,Test_loss:0.629,Lr:1.00E-02

Epoch:10, Train_acc:89.0%,Train_loss:0.325,Test_acc:60.5%,Test_loss:1.178,Lr:1.00E-02

Epoch:11, Train_acc:89.8%,Train_loss:0.280,Test_acc:72.4%,Test_loss:0.615,Lr:1.00E-02

Epoch:12, Train_acc:92.8%,Train_loss:0.219,Test_acc:78.9%,Test_loss:0.663,Lr:1.00E-02

Epoch:13, Train_acc:86.9%,Train_loss:0.331,Test_acc:76.3%,Test_loss:0.718,Lr:1.00E-02

Epoch:14, Train_acc:93.2%,Train_loss:0.171,Test_acc:76.3%,Test_loss:0.788,Lr:1.00E-02

Epoch:15, Train_acc:95.2%,Train_loss:0.160,Test_acc:71.1%,Test_loss:0.689,Lr:1.00E-02

Epoch:16, Train_acc:91.6%,Train_loss:0.247,Test_acc:77.6%,Test_loss:0.738,Lr:1.00E-02

Epoch:17, Train_acc:95.8%,Train_loss:0.166,Test_acc:76.3%,Test_loss:0.726,Lr:1.00E-02

Epoch:18, Train_acc:96.8%,Train_loss:0.129,Test_acc:77.6%,Test_loss:0.777,Lr:1.00E-02

Epoch:19, Train_acc:97.4%,Train_loss:0.110,Test_acc:77.6%,Test_loss:0.853,Lr:1.00E-02

Epoch:20, Train_acc:97.2%,Train_loss:0.105,Test_acc:73.7%,Test_loss:0.945,Lr:1.00E-02

Epoch:21, Train_acc:97.6%,Train_loss:0.107,Test_acc:77.6%,Test_loss:0.862,Lr:1.00E-02

Epoch:22, Train_acc:98.4%,Train_loss:0.089,Test_acc:75.0%,Test_loss:0.921,Lr:1.00E-02

Epoch:23, Train_acc:98.8%,Train_loss:0.071,Test_acc:77.6%,Test_loss:0.818,Lr:1.00E-02

Epoch:24, Train_acc:97.6%,Train_loss:0.084,Test_acc:75.0%,Test_loss:0.811,Lr:1.00E-02

Epoch:25, Train_acc:98.6%,Train_loss:0.064,Test_acc:77.6%,Test_loss:0.762,Lr:1.00E-02

Epoch:26, Train_acc:99.2%,Train_loss:0.052,Test_acc:75.0%,Test_loss:0.775,Lr:1.00E-02

Epoch:27, Train_acc:99.4%,Train_loss:0.053,Test_acc:76.3%,Test_loss:0.844,Lr:1.00E-02

Epoch:28, Train_acc:98.8%,Train_loss:0.051,Test_acc:75.0%,Test_loss:0.988,Lr:1.00E-02

Epoch:29, Train_acc:99.8%,Train_loss:0.045,Test_acc:76.3%,Test_loss:0.800,Lr:1.00E-02

Epoch:30, Train_acc:99.4%,Train_loss:0.044,Test_acc:75.0%,Test_loss:0.789,Lr:1.00E-02

Epoch:31, Train_acc:99.4%,Train_loss:0.044,Test_acc:76.3%,Test_loss:0.831,Lr:1.00E-02

Epoch:32, Train_acc:99.8%,Train_loss:0.029,Test_acc:76.3%,Test_loss:1.050,Lr:1.00E-02

Epoch:33, Train_acc:99.6%,Train_loss:0.034,Test_acc:77.6%,Test_loss:0.853,Lr:1.00E-02

Epoch:34, Train_acc:98.8%,Train_loss:0.050,Test_acc:78.9%,Test_loss:1.084,Lr:1.00E-02

Epoch:35, Train_acc:99.6%,Train_loss:0.049,Test_acc:77.6%,Test_loss:1.032,Lr:1.00E-02

Epoch:36, Train_acc:99.6%,Train_loss:0.034,Test_acc:78.9%,Test_loss:1.497,Lr:1.00E-02

Epoch:37, Train_acc:98.6%,Train_loss:0.056,Test_acc:76.3%,Test_loss:0.785,Lr:1.00E-02

Epoch:38, Train_acc:99.6%,Train_loss:0.025,Test_acc:81.6%,Test_loss:1.048,Lr:1.00E-02

Epoch:39, Train_acc:99.8%,Train_loss:0.024,Test_acc:76.3%,Test_loss:1.205,Lr:1.00E-02

Epoch:40, Train_acc:99.4%,Train_loss:0.024,Test_acc:72.4%,Test_loss:1.039,Lr:1.00E-02

Done

1e-3

Epoch: 1, Train_acc:50.0%,Train_loss:3.273,Test_acc:61.8%,Test_loss:0.652,Lr:1.00E-03

Epoch: 2, Train_acc:53.8%,Train_loss:1.732,Test_acc:56.6%,Test_loss:0.777,Lr:1.00E-03

Epoch: 3, Train_acc:72.7%,Train_loss:0.683,Test_acc:52.6%,Test_loss:1.196,Lr:1.00E-03

Epoch: 4, Train_acc:58.8%,Train_loss:1.128,Test_acc:73.7%,Test_loss:0.621,Lr:1.00E-03

Epoch: 5, Train_acc:77.7%,Train_loss:0.484,Test_acc:69.7%,Test_loss:0.703,Lr:1.00E-03

Epoch: 6, Train_acc:82.7%,Train_loss:0.360,Test_acc:61.8%,Test_loss:1.136,Lr:1.00E-03

Epoch: 7, Train_acc:72.7%,Train_loss:0.636,Test_acc:73.7%,Test_loss:0.598,Lr:1.00E-03

Epoch: 8, Train_acc:87.8%,Train_loss:0.306,Test_acc:72.4%,Test_loss:0.517,Lr:1.00E-03

Epoch: 9, Train_acc:86.9%,Train_loss:0.307,Test_acc:71.1%,Test_loss:0.652,Lr:1.00E-03

Epoch:10, Train_acc:91.8%,Train_loss:0.233,Test_acc:73.7%,Test_loss:0.706,Lr:1.00E-03

Epoch:11, Train_acc:87.1%,Train_loss:0.284,Test_acc:77.6%,Test_loss:0.459,Lr:1.00E-03

Epoch:12, Train_acc:95.2%,Train_loss:0.166,Test_acc:78.9%,Test_loss:0.605,Lr:1.00E-03

Epoch:13, Train_acc:94.8%,Train_loss:0.162,Test_acc:77.6%,Test_loss:0.513,Lr:1.00E-03

Epoch:14, Train_acc:95.4%,Train_loss:0.141,Test_acc:80.3%,Test_loss:0.546,Lr:1.00E-03

Epoch:15, Train_acc:96.2%,Train_loss:0.143,Test_acc:81.6%,Test_loss:0.442,Lr:1.00E-03

Epoch:16, Train_acc:95.6%,Train_loss:0.136,Test_acc:78.9%,Test_loss:0.452,Lr:1.00E-03

Epoch:17, Train_acc:98.2%,Train_loss:0.098,Test_acc:78.9%,Test_loss:0.516,Lr:1.00E-03

Epoch:18, Train_acc:98.2%,Train_loss:0.094,Test_acc:80.3%,Test_loss:0.428,Lr:1.00E-03

Epoch:19, Train_acc:98.4%,Train_loss:0.089,Test_acc:78.9%,Test_loss:0.432,Lr:1.00E-03

Epoch:20, Train_acc:99.0%,Train_loss:0.081,Test_acc:80.3%,Test_loss:0.391,Lr:1.00E-03

Epoch:21, Train_acc:99.8%,Train_loss:0.068,Test_acc:78.9%,Test_loss:0.437,Lr:1.00E-03

Epoch:22, Train_acc:99.6%,Train_loss:0.073,Test_acc:80.3%,Test_loss:0.499,Lr:1.00E-03

Epoch:23, Train_acc:99.4%,Train_loss:0.065,Test_acc:80.3%,Test_loss:0.448,Lr:1.00E-03

Epoch:24, Train_acc:100.0%,Train_loss:0.053,Test_acc:80.3%,Test_loss:0.388,Lr:1.00E-03

Epoch:25, Train_acc:99.8%,Train_loss:0.056,Test_acc:82.9%,Test_loss:0.374,Lr:1.00E-03

Epoch:26, Train_acc:100.0%,Train_loss:0.047,Test_acc:78.9%,Test_loss:0.403,Lr:1.00E-03

Epoch:27, Train_acc:99.8%,Train_loss:0.045,Test_acc:82.9%,Test_loss:0.491,Lr:1.00E-03

Epoch:28, Train_acc:100.0%,Train_loss:0.043,Test_acc:81.6%,Test_loss:0.473,Lr:1.00E-03

Epoch:29, Train_acc:99.8%,Train_loss:0.041,Test_acc:84.2%,Test_loss:0.437,Lr:1.00E-03

Epoch:30, Train_acc:100.0%,Train_loss:0.039,Test_acc:80.3%,Test_loss:0.443,Lr:1.00E-03

Epoch:31, Train_acc:99.6%,Train_loss:0.038,Test_acc:82.9%,Test_loss:0.465,Lr:1.00E-03

Epoch:32, Train_acc:100.0%,Train_loss:0.033,Test_acc:82.9%,Test_loss:0.452,Lr:1.00E-03

Epoch:33, Train_acc:100.0%,Train_loss:0.032,Test_acc:82.9%,Test_loss:0.453,Lr:1.00E-03

Epoch:34, Train_acc:100.0%,Train_loss:0.030,Test_acc:81.6%,Test_loss:0.653,Lr:1.00E-03

Epoch:35, Train_acc:100.0%,Train_loss:0.031,Test_acc:80.3%,Test_loss:0.568,Lr:1.00E-03

Epoch:36, Train_acc:99.8%,Train_loss:0.030,Test_acc:80.3%,Test_loss:0.403,Lr:1.00E-03

Epoch:37, Train_acc:100.0%,Train_loss:0.028,Test_acc:82.9%,Test_loss:0.459,Lr:1.00E-03

Epoch:38, Train_acc:100.0%,Train_loss:0.024,Test_acc:82.9%,Test_loss:0.432,Lr:1.00E-03

Epoch:39, Train_acc:99.8%,Train_loss:0.027,Test_acc:81.6%,Test_loss:0.382,Lr:1.00E-03

Epoch:40, Train_acc:100.0%,Train_loss:0.023,Test_acc:82.9%,Test_loss:0.456,Lr:1.00E-03

Done

1e-4

Epoch: 1, Train_acc:53.4%,Train_loss:0.747,Test_acc:47.4%,Test_loss:0.695,Lr:1.00E-04

Epoch: 2, Train_acc:56.0%,Train_loss:0.725,Test_acc:60.5%,Test_loss:0.668,Lr:1.00E-04

Epoch: 3, Train_acc:66.1%,Train_loss:0.617,Test_acc:64.5%,Test_loss:0.646,Lr:1.00E-04

Epoch: 4, Train_acc:66.5%,Train_loss:0.603,Test_acc:61.8%,Test_loss:0.633,Lr:1.00E-04

Epoch: 5, Train_acc:75.7%,Train_loss:0.530,Test_acc:63.2%,Test_loss:0.598,Lr:1.00E-04

Epoch: 6, Train_acc:74.3%,Train_loss:0.529,Test_acc:63.2%,Test_loss:0.600,Lr:1.00E-04

Epoch: 7, Train_acc:76.9%,Train_loss:0.499,Test_acc:65.8%,Test_loss:0.576,Lr:1.00E-04

Epoch: 8, Train_acc:78.1%,Train_loss:0.474,Test_acc:69.7%,Test_loss:0.536,Lr:1.00E-04

Epoch: 9, Train_acc:82.3%,Train_loss:0.442,Test_acc:60.5%,Test_loss:0.724,Lr:1.00E-04

Epoch:10, Train_acc:82.3%,Train_loss:0.440,Test_acc:67.1%,Test_loss:0.618,Lr:1.00E-04

Epoch:11, Train_acc:82.9%,Train_loss:0.418,Test_acc:68.4%,Test_loss:0.549,Lr:1.00E-04

Epoch:12, Train_acc:87.3%,Train_loss:0.384,Test_acc:71.1%,Test_loss:0.539,Lr:1.00E-04

Epoch:13, Train_acc:86.9%,Train_loss:0.375,Test_acc:69.7%,Test_loss:0.517,Lr:1.00E-04

Epoch:14, Train_acc:89.2%,Train_loss:0.354,Test_acc:72.4%,Test_loss:0.514,Lr:1.00E-04

Epoch:15, Train_acc:90.0%,Train_loss:0.346,Test_acc:67.1%,Test_loss:0.529,Lr:1.00E-04

Epoch:16, Train_acc:89.8%,Train_loss:0.341,Test_acc:69.7%,Test_loss:0.542,Lr:1.00E-04

Epoch:17, Train_acc:90.4%,Train_loss:0.328,Test_acc:71.1%,Test_loss:0.561,Lr:1.00E-04

Epoch:18, Train_acc:89.6%,Train_loss:0.319,Test_acc:69.7%,Test_loss:0.561,Lr:1.00E-04

Epoch:19, Train_acc:91.6%,Train_loss:0.310,Test_acc:71.1%,Test_loss:0.499,Lr:1.00E-04

Epoch:20, Train_acc:93.4%,Train_loss:0.292,Test_acc:69.7%,Test_loss:0.553,Lr:1.00E-04

Epoch:21, Train_acc:91.8%,Train_loss:0.292,Test_acc:69.7%,Test_loss:0.546,Lr:1.00E-04

Epoch:22, Train_acc:93.2%,Train_loss:0.276,Test_acc:72.4%,Test_loss:0.548,Lr:1.00E-04

Epoch:23, Train_acc:94.0%,Train_loss:0.273,Test_acc:71.1%,Test_loss:0.539,Lr:1.00E-04

Epoch:24, Train_acc:94.2%,Train_loss:0.266,Test_acc:71.1%,Test_loss:0.526,Lr:1.00E-04

Epoch:25, Train_acc:95.2%,Train_loss:0.257,Test_acc:72.4%,Test_loss:0.514,Lr:1.00E-04

Epoch:26, Train_acc:94.8%,Train_loss:0.258,Test_acc:71.1%,Test_loss:0.466,Lr:1.00E-04

Epoch:27, Train_acc:96.6%,Train_loss:0.233,Test_acc:72.4%,Test_loss:0.520,Lr:1.00E-04

Epoch:28, Train_acc:95.8%,Train_loss:0.240,Test_acc:69.7%,Test_loss:0.513,Lr:1.00E-04

Epoch:29, Train_acc:96.6%,Train_loss:0.238,Test_acc:69.7%,Test_loss:0.485,Lr:1.00E-04

Epoch:30, Train_acc:96.6%,Train_loss:0.217,Test_acc:69.7%,Test_loss:0.531,Lr:1.00E-04

Epoch:31, Train_acc:96.4%,Train_loss:0.228,Test_acc:72.4%,Test_loss:0.491,Lr:1.00E-04

Epoch:32, Train_acc:97.4%,Train_loss:0.216,Test_acc:73.7%,Test_loss:0.533,Lr:1.00E-04

Epoch:33, Train_acc:97.4%,Train_loss:0.212,Test_acc:73.7%,Test_loss:0.483,Lr:1.00E-04

Epoch:34, Train_acc:97.2%,Train_loss:0.207,Test_acc:71.1%,Test_loss:0.536,Lr:1.00E-04

Epoch:35, Train_acc:96.8%,Train_loss:0.210,Test_acc:69.7%,Test_loss:0.512,Lr:1.00E-04

Epoch:36, Train_acc:97.6%,Train_loss:0.192,Test_acc:72.4%,Test_loss:0.471,Lr:1.00E-04

Epoch:37, Train_acc:98.4%,Train_loss:0.182,Test_acc:72.4%,Test_loss:0.488,Lr:1.00E-04

Epoch:38, Train_acc:97.6%,Train_loss:0.182,Test_acc:71.1%,Test_loss:0.531,Lr:1.00E-04

Epoch:39, Train_acc:99.2%,Train_loss:0.182,Test_acc:72.4%,Test_loss:0.481,Lr:1.00E-04

Epoch:40, Train_acc:98.2%,Train_loss:0.176,Test_acc:73.7%,Test_loss:0.581,Lr:1.00E-04

Done

11.2 Dynamic learning rate

1e-1

Epoch: 1, Train_acc:49.8%,Train_loss:15.757,Test_acc:50.0%,Test_loss:20.521,Lr:1.00E-01

Epoch: 2, Train_acc:47.2%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:1.00E-01

Epoch: 3, Train_acc:48.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:9.20E-02

Epoch: 4, Train_acc:48.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:9.20E-02

Epoch: 5, Train_acc:48.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:8.46E-02

Epoch: 6, Train_acc:48.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:8.46E-02

Epoch: 7, Train_acc:46.4%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:7.79E-02

Epoch: 8, Train_acc:45.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:7.79E-02

Epoch: 9, Train_acc:49.2%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:7.16E-02

Epoch:10, Train_acc:47.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:7.16E-02

Epoch:11, Train_acc:46.4%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:6.59E-02

Epoch:12, Train_acc:47.6%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:6.59E-02

Epoch:13, Train_acc:47.2%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:6.06E-02

Epoch:14, Train_acc:50.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:6.06E-02

Epoch:15, Train_acc:48.8%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:5.58E-02

Epoch:16, Train_acc:45.2%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:5.58E-02

Epoch:17, Train_acc:50.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:5.13E-02

Epoch:18, Train_acc:50.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:5.13E-02

Epoch:19, Train_acc:46.8%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:4.72E-02

Epoch:20, Train_acc:46.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:4.72E-02

Epoch:21, Train_acc:50.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:4.34E-02

Epoch:22, Train_acc:48.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:4.34E-02

Epoch:23, Train_acc:49.2%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:4.00E-02

Epoch:24, Train_acc:47.2%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:4.00E-02

Epoch:25, Train_acc:48.0%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:3.68E-02

Epoch:26, Train_acc:46.4%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:3.68E-02

Epoch:27, Train_acc:48.8%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:3.38E-02

Epoch:28, Train_acc:48.8%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:3.38E-02

Epoch:29, Train_acc:50.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:3.11E-02

Epoch:30, Train_acc:47.2%,Train_loss:0.694,Test_acc:50.0%,Test_loss:0.693,Lr:3.11E-02

Epoch:31, Train_acc:49.2%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.86E-02

Epoch:32, Train_acc:48.4%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.86E-02

Epoch:33, Train_acc:46.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.63E-02

Epoch:34, Train_acc:46.4%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.63E-02

Epoch:35, Train_acc:50.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.42E-02

Epoch:36, Train_acc:46.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.42E-02

Epoch:37, Train_acc:46.4%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.23E-02

Epoch:38, Train_acc:45.6%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.23E-02

Epoch:39, Train_acc:49.2%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.05E-02

Epoch:40, Train_acc:50.0%,Train_loss:0.693,Test_acc:50.0%,Test_loss:0.693,Lr:2.05E-02

Done
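
In this run the loss sits at about 0.693 from epoch 2 onward while the test accuracy stays at 50.0%. That value matches ln 2, the cross-entropy of a model that assigns probability 0.5 to each of two classes, so the network has most likely collapsed to a constant prediction at this learning rate. The short check below assumes a two-class problem trained with a cross-entropy loss, which the log suggests but this section does not state; `uniform_cross_entropy` is a hypothetical helper name.

```python
import math


def uniform_cross_entropy(num_classes: int) -> float:
    """Cross-entropy (natural log) when every class gets probability 1/num_classes."""
    return -math.log(1.0 / num_classes)


# Assumption: two classes, as the 50.0% chance-level accuracy suggests.
chance_loss = uniform_cross_entropy(2)
print(f"{chance_loss:.3f}")  # 0.693
```

A loss pinned at this value together with chance-level accuracy is a common sign that the starting learning rate is too large for the model to recover from the first few updates.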

1e-2

Epoch: 1, Train_acc:47.6%,Train_loss:13.706,Test_acc:48.7%,Test_loss:6.212,Lr:1.00E-02

Epoch: 2, Train_acc:52.0%,Train_loss:2.729,Test_acc:50.0%,Test_loss:1.496,Lr:1.00E-02

Epoch: 3, Train_acc:65.9%,Train_loss:1.144,Test_acc:65.8%,Test_loss:0.933,Lr:9.20E-03

Epoch: 4, Train_acc:69.1%,Train_loss:0.876,Test_acc:53.9%,Test_loss:1.445,Lr:9.20E-03

Epoch: 5, Train_acc:72.7%,Train_loss:0.722,Test_acc:64.5%,Test_loss:0.757,Lr:8.46E-03

Epoch: 6, Train_acc:79.7%,Train_loss:0.488,Test_acc:72.4%,Test_loss:0.614,Lr:8.46E-03

Epoch: 7, Train_acc:83.9%,Train_loss:0.396,Test_acc:75.0%,Test_loss:0.787,Lr:7.79E-03

Epoch: 8, Train_acc:83.7%,Train_loss:0.446,Test_acc:71.1%,Test_loss:0.643,Lr:7.79E-03

Epoch: 9, Train_acc:83.3%,Train_loss:0.356,Test_acc:73.7%,Test_loss:0.769,Lr:7.16E-03

Epoch:10, Train_acc:86.1%,Train_loss:0.325,Test_acc:71.1%,Test_loss:1.028,Lr:7.16E-03

Epoch:11, Train_acc:88.6%,Train_loss:0.284,Test_acc:73.7%,Test_loss:0.710,Lr:6.59E-03

Epoch:12, Train_acc:91.0%,Train_loss:0.266,Test_acc:67.1%,Test_loss:1.325,Lr:6.59E-03

Epoch:13, Train_acc:88.4%,Train_loss:0.267,Test_acc:76.3%,Test_loss:0.659,Lr:6.06E-03

Epoch:14, Train_acc:90.6%,Train_loss:0.229,Test_acc:76.3%,Test_loss:0.628,Lr:6.06E-03

Epoch:15, Train_acc:90.8%,Train_loss:0.237,Test_acc:73.7%,Test_loss:0.858,Lr:5.58E-03

Epoch:16, Train_acc:91.4%,Train_loss:0.222,Test_acc:75.0%,Test_loss:0.951,Lr:5.58E-03

Epoch:17, Train_acc:92.8%,Train_loss:0.200,Test_acc:78.9%,Test_loss:0.851,Lr:5.13E-03

Epoch:18, Train_acc:93.0%,Train_loss:0.200,Test_acc:72.4%,Test_loss:0.753,Lr:5.13E-03

Epoch:19, Train_acc:92.4%,Train_loss:0.199,Test_acc:78.9%,Test_loss:0.790,Lr:4.72E-03

Epoch:20, Train_acc:94.6%,Train_loss:0.164,Test_acc:80.3%,Test_loss:0.735,Lr:4.72E-03

Epoch:21, Train_acc:93.6%,Train_loss:0.165,Test_acc:77.6%,Test_loss:0.969,Lr:4.34E-03

Epoch:22, Train_acc:93.8%,Train_loss:0.165,Test_acc:72.4%,Test_loss:0.838,Lr:4.34E-03

Epoch:23, Train_acc:95.4%,Train_loss:0.156,Test_acc:71.1%,Test_loss:1.048,Lr:4.00E-03

Epoch:24, Train_acc:95.8%,Train_loss:0.148,Test_acc:78.9%,Test_loss:0.728,Lr:4.00E-03

Epoch:25, Train_acc:97.6%,Train_loss:0.126,Test_acc:77.6%,Test_loss:0.801,Lr:3.68E-03

Epoch:26, Train_acc:96.0%,Train_loss:0.138,Test_acc:77.6%,Test_loss:0.828,Lr:3.68E-03

Epoch:27, Train_acc:96.6%,Train_loss:0.131,Test_acc:77.6%,Test_loss:0.767,Lr:3.38E-03

Epoch:28, Train_acc:96.2%,Train_loss:0.125,Test_acc:77.6%,Test_loss:0.999,Lr:3.38E-03

Epoch:29, Train_acc:96.6%,Train_loss:0.112,Test_acc:78.9%,Test_loss:0.864,Lr:3.11E-03

Epoch:30, Train_acc:97.8%,Train_loss:0.094,Test_acc:78.9%,Test_loss:0.956,Lr:3.11E-03

Epoch:31, Train_acc:96.2%,Train_loss:0.121,Test_acc:77.6%,Test_loss:0.723,Lr:2.86E-03

Epoch:32, Train_acc:97.2%,Train_loss:0.099,Test_acc:77.6%,Test_loss:0.703,Lr:2.86E-03

Epoch:33, Train_acc:97.6%,Train_loss:0.093,Test_acc:78.9%,Test_loss:0.971,Lr:2.63E-03

Epoch:34, Train_acc:97.8%,Train_loss:0.098,Test_acc:78.9%,Test_loss:0.757,Lr:2.63E-03

Epoch:35, Train_acc:98.2%,Train_loss:0.095,Test_acc:78.9%,Test_loss:0.896,Lr:2.42E-03

Epoch:36, Train_acc:98.6%,Train_loss:0.082,Test_acc:78.9%,Test_loss:0.784,Lr:2.42E-03

Epoch:37, Train_acc:98.4%,Train_loss:0.079,Test_acc:78.9%,Test_loss:0.933,Lr:2.23E-03

Epoch:38, Train_acc:98.8%,Train_loss:0.082,Test_acc:77.6%,Test_loss:1.152,Lr:2.23E-03

Epoch:39, Train_acc:98.8%,Train_loss:0.075,Test_acc:78.9%,Test_loss:0.855,Lr:2.05E-03

Epoch:40, Train_acc:98.6%,Train_loss:0.084,Test_acc:78.9%,Test_loss:0.824,Lr:2.05E-03

Done
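
The Lr column in the dynamic-learning-rate logs is consistent with a step decay that multiplies the learning rate by 0.92 every 2 epochs. The sketch below reproduces that column under this assumption. The decay factor and step size are inferred from the logged values rather than copied from the code in section 4.2, and `lr_at_epoch` is a hypothetical helper name.

```python
def lr_at_epoch(epoch: int, start_lr: float, decay: float = 0.92, step: int = 2) -> float:
    """Learning rate in effect during a 1-indexed epoch under step decay.

    decay and step are assumptions inferred from the logged Lr values.
    """
    return start_lr * decay ** ((epoch - 1) // step)


# Print a few epochs in the same format as the training log.
for epoch in (1, 3, 5, 39):
    print(f"Epoch:{epoch:2d}, Lr:{lr_at_epoch(epoch, 1e-2):.2E}")
```

With `start_lr=1e-2` this prints 1.00E-02, 9.20E-03, 8.46E-03 and 2.05E-03, the same values the log shows for epochs 1, 3, 5 and 39. After 40 epochs the learning rate has only dropped to about a fifth of its starting value, so the choice of starting value still dominates the outcome.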

1e-3

Epoch: 1, Train_acc:53.8%,Train_loss:3.389,Test_acc:51.3%,Test_loss:0.933,Lr:1.00E-03

Epoch: 2, Train_acc:55.8%,Train_loss:2.331,Test_acc:53.9%,Test_loss:1.597,Lr:1.00E-03

Epoch: 3, Train_acc:69.9%,Train_loss:0.969,Test_acc:65.8%,Test_loss:0.796,Lr:9.20E-04

Epoch: 4, Train_acc:62.5%,Train_loss:1.327,Test_acc:71.1%,Test_loss:0.686,Lr:9.20E-04

Epoch: 5, Train_acc:80.9%,Train_loss:0.462,Test_acc:68.4%,Test_loss:1.011,Lr:8.46E-04

Epoch: 6, Train_acc:79.5%,Train_loss:0.522,Test_acc:73.7%,Test_loss:0.564,Lr:8.46E-04

Epoch: 7, Train_acc:85.1%,Train_loss:0.321,Test_acc:76.3%,Test_loss:0.680,Lr:7.79E-04

Epoch: 8, Train_acc:86.9%,Train_loss:0.288,Test_acc:76.3%,Test_loss:0.467,Lr:7.79E-04

Epoch: 9, Train_acc:94.6%,Train_loss:0.173,Test_acc:77.6%,Test_loss:0.425,Lr:7.16E-04

Epoch:10, Train_acc:97.0%,Train_loss:0.134,Test_acc:76.3%,Test_loss:0.439,Lr:7.16E-04

Epoch:11, Train_acc:95.8%,Train_loss:0.136,Test_acc:75.0%,Test_loss:0.400,Lr:6.59E-04

Epoch:12, Train_acc:97.2%,Train_loss:0.117,Test_acc:76.3%,Test_loss:0.450,Lr:6.59E-04

Epoch:13, Train_acc:97.4%,Train_loss:0.113,Test_acc:80.3%,Test_loss:0.448,Lr:6.06E-04

Epoch:14, Train_acc:98.4%,Train_loss:0.104,Test_acc:76.3%,Test_loss:0.379,Lr:6.06E-04

Epoch:15, Train_acc:98.8%,Train_loss:0.096,Test_acc:77.6%,Test_loss:0.441,Lr:5.58E-04

Epoch:16, Train_acc:99.0%,Train_loss:0.090,Test_acc:80.3%,Test_loss:0.428,Lr:5.58E-04

Epoch:17, Train_acc:99.0%,Train_loss:0.090,Test_acc:78.9%,Test_loss:0.367,Lr:5.13E-04

Epoch:18, Train_acc:99.4%,Train_loss:0.084,Test_acc:78.9%,Test_loss:0.429,Lr:5.13E-04

Epoch:19, Train_acc:99.0%,Train_loss:0.079,Test_acc:78.9%,Test_loss:0.403,Lr:4.72E-04

Epoch:20, Train_acc:99.2%,Train_loss:0.076,Test_acc:78.9%,Test_loss:0.341,Lr:4.72E-04

Epoch:21, Train_acc:99.6%,Train_loss:0.073,Test_acc:80.3%,Test_loss:0.392,Lr:4.34E-04

Epoch:22, Train_acc:99.4%,Train_loss:0.073,Test_acc:78.9%,Test_loss:0.424,Lr:4.34E-04

Epoch:23, Train_acc:99.6%,Train_loss:0.067,Test_acc:78.9%,Test_loss:0.409,Lr:4.00E-04

Epoch:24, Train_acc:99.6%,Train_loss:0.067,Test_acc:78.9%,Test_loss:0.407,Lr:4.00E-04

Epoch:25, Train_acc:99.6%,Train_loss:0.061,Test_acc:78.9%,Test_loss:0.431,Lr:3.68E-04

Epoch:26, Train_acc:99.8%,Train_loss:0.063,Test_acc:80.3%,Test_loss:0.376,Lr:3.68E-04

Epoch:27, Train_acc:99.8%,Train_loss:0.065,Test_acc:81.6%,Test_loss:0.397,Lr:3.38E-04

Epoch:28, Train_acc:99.6%,Train_loss:0.060,Test_acc:80.3%,Test_loss:0.446,Lr:3.38E-04

Epoch:29, Train_acc:99.8%,Train_loss:0.057,Test_acc:81.6%,Test_loss:0.378,Lr:3.11E-04

Epoch:30, Train_acc:99.8%,Train_loss:0.058,Test_acc:81.6%,Test_loss:0.391,Lr:3.11E-04

Epoch:31, Train_acc:99.6%,Train_loss:0.057,Test_acc:80.3%,Test_loss:0.380,Lr:2.86E-04

Epoch:32, Train_acc:99.8%,Train_loss:0.056,Test_acc:78.9%,Test_loss:0.353,Lr:2.86E-04

Epoch:33, Train_acc:99.6%,Train_loss:0.059,Test_acc:80.3%,Test_loss:0.438,Lr:2.63E-04

Epoch:34, Train_acc:99.8%,Train_loss:0.051,Test_acc:81.6%,Test_loss:0.420,Lr:2.63E-04

Epoch:35, Train_acc:99.6%,Train_loss:0.055,Test_acc:81.6%,Test_loss:0.368,Lr:2.42E-04

Epoch:36, Train_acc:100.0%,Train_loss:0.053,Test_acc:81.6%,Test_loss:0.417,Lr:2.42E-04

Epoch:37, Train_acc:100.0%,Train_loss:0.050,Test_acc:81.6%,Test_loss:0.412,Lr:2.23E-04

Epoch:38, Train_acc:100.0%,Train_loss:0.052,Test_acc:78.9%,Test_loss:0.366,Lr:2.23E-04

Epoch:39, Train_acc:100.0%,Train_loss:0.051,Test_acc:78.9%,Test_loss:0.349,Lr:2.05E-04

Epoch:40, Train_acc:100.0%,Train_loss:0.051,Test_acc:78.9%,Test_loss:0.427,Lr:2.05E-04

Done

1e-4

Epoch: 1, Train_acc:55.8%,Train_loss:0.747,Test_acc:50.0%,Test_loss:0.706,Lr:1.00E-04

Epoch: 2, Train_acc:62.4%,Train_loss:0.666,Test_acc:60.5%,Test_loss:0.670,Lr:1.00E-04

Epoch: 3, Train_acc:67.5%,Train_loss:0.611,Test_acc:67.1%,Test_loss:0.619,Lr:9.20E-05

Epoch: 4, Train_acc:69.7%,Train_loss:0.580,Test_acc:65.8%,Test_loss:0.628,Lr:9.20E-05

Epoch: 5, Train_acc:73.5%,Train_loss:0.530,Test_acc:64.5%,Test_loss:0.557,Lr:8.46E-05

Epoch: 6, Train_acc:73.5%,Train_loss:0.528,Test_acc:67.1%,Test_loss:0.566,Lr:8.46E-05

Epoch: 7, Train_acc:80.1%,Train_loss:0.469,Test_acc:68.4%,Test_loss:0.571,Lr:7.79E-05

Epoch: 8, Train_acc:78.3%,Train_loss:0.481,Test_acc:68.4%,Test_loss:0.551,Lr:7.79E-05

Epoch: 9, Train_acc:82.5%,Train_loss:0.449,Test_acc:69.7%,Test_loss:0.502,Lr:7.16E-05

Epoch:10, Train_acc:81.3%,Train_loss:0.443,Test_acc:68.4%,Test_loss:0.514,Lr:7.16E-05

Epoch:11, Train_acc:87.5%,Train_loss:0.412,Test_acc:72.4%,Test_loss:0.489,Lr:6.59E-05

Epoch:12, Train_acc:84.9%,Train_loss:0.417,Test_acc:68.4%,Test_loss:0.496,Lr:6.59E-05

Epoch:13, Train_acc:86.1%,Train_loss:0.395,Test_acc:72.4%,Test_loss:0.538,Lr:6.06E-05

Epoch:14, Train_acc:87.1%,Train_loss:0.374,Test_acc:71.1%,Test_loss:0.512,Lr:6.06E-05

Epoch:15, Train_acc:86.9%,Train_loss:0.371,Test_acc:72.4%,Test_loss:0.529,Lr:5.58E-05

Epoch:16, Train_acc:86.9%,Train_loss:0.377,Test_acc:72.4%,Test_loss:0.522,Lr:5.58E-05

Epoch:17, Train_acc:89.2%,Train_loss:0.347,Test_acc:72.4%,Test_loss:0.500,Lr:5.13E-05

Epoch:18, Train_acc:89.8%,Train_loss:0.341,Test_acc:69.7%,Test_loss:0.503,Lr:5.13E-05

Epoch:19, Train_acc:90.4%,Train_loss:0.334,Test_acc:72.4%,Test_loss:0.428,Lr:4.72E-05

Epoch:20, Train_acc:91.0%,Train_loss:0.330,Test_acc:77.6%,Test_loss:0.483,Lr:4.72E-05

Epoch:21, Train_acc:91.4%,Train_loss:0.322,Test_acc:72.4%,Test_loss:0.466,Lr:4.34E-05

Epoch:22, Train_acc:92.0%,Train_loss:0.327,Test_acc:75.0%,Test_loss:0.437,Lr:4.34E-05

Epoch:23, Train_acc:91.0%,Train_loss:0.314,Test_acc:75.0%,Test_loss:0.461,Lr:4.00E-05

Epoch:24, Train_acc:92.0%,Train_loss:0.313,Test_acc:75.0%,Test_loss:0.485,Lr:4.00E-05

Epoch:25, Train_acc:91.0%,Train_loss:0.301,Test_acc:75.0%,Test_loss:0.451,Lr:3.68E-05

Epoch:26, Train_acc:92.8%,Train_loss:0.293,Test_acc:73.7%,Test_loss:0.505,Lr:3.68E-05

Epoch:27, Train_acc:93.6%,Train_loss:0.300,Test_acc:73.7%,Test_loss:0.441,Lr:3.38E-05

Epoch:28, Train_acc:94.2%,Train_loss:0.291,Test_acc:75.0%,Test_loss:0.470,Lr:3.38E-05

Epoch:29, Train_acc:92.8%,Train_loss:0.293,Test_acc:76.3%,Test_loss:0.437,Lr:3.11E-05

Epoch:30, Train_acc:92.6%,Train_loss:0.289,Test_acc:75.0%,Test_loss:0.465,Lr:3.11E-05

Epoch:31, Train_acc:92.8%,Train_loss:0.292,Test_acc:75.0%,Test_loss:0.446,Lr:2.86E-05

Epoch:32, Train_acc:94.0%,Train_loss:0.277,Test_acc:75.0%,Test_loss:0.466,Lr:2.86E-05

Epoch:33, Train_acc:92.6%,Train_loss:0.281,Test_acc:75.0%,Test_loss:0.483,Lr:2.63E-05

Epoch:34, Train_acc:93.8%,Train_loss:0.287,Test_acc:75.0%,Test_loss:0.467,Lr:2.63E-05

Epoch:35, Train_acc:93.8%,Train_loss:0.283,Test_acc:76.3%,Test_loss:0.454,Lr:2.42E-05

Epoch:36, Train_acc:94.6%,Train_loss:0.273,Test_acc:76.3%,Test_loss:0.447,Lr:2.42E-05

Epoch:37, Train_acc:94.0%,Train_loss:0.278,Test_acc:76.3%,Test_loss:0.454,Lr:2.23E-05

Epoch:38, Train_acc:96.2%,Train_loss:0.264,Test_acc:75.0%,Test_loss:0.464,Lr:2.23E-05

Epoch:39, Train_acc:93.6%,Train_loss:0.260,Test_acc:76.3%,Test_loss:0.451,Lr:2.05E-05

Epoch:40, Train_acc:96.4%,Train_loss:0.258,Test_acc:76.3%,Test_loss:0.411,Lr:2.05E-05

Done

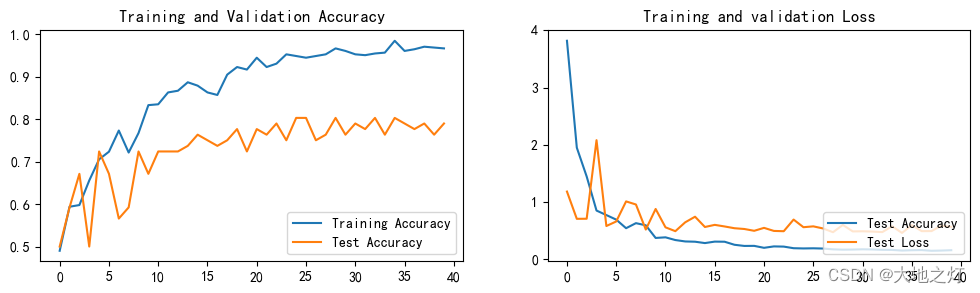

Training-set horizontal flip + dynamic learning rate

1e-3

Epoch: 1, Train_acc:49.0%,Train_loss:3.819,Test_acc:50.0%,Test_loss:1.180,Lr:1.00E-03

Epoch: 2, Train_acc:59.4%,Train_loss:1.948,Test_acc:59.2%,Test_loss:0.703,Lr:1.00E-03

Epoch: 3, Train_acc:59.8%,Train_loss:1.444,Test_acc:67.1%,Test_loss:0.705,Lr:9.20E-04

Epoch: 4, Train_acc:65.5%,Train_loss:0.848,Test_acc:50.0%,Test_loss:2.079,Lr:9.20E-04

Epoch: 5, Train_acc:70.5%,Train_loss:0.769,Test_acc:72.4%,Test_loss:0.577,Lr:8.46E-04

Epoch: 6, Train_acc:72.3%,Train_loss:0.689,Test_acc:67.1%,Test_loss:0.657,Lr:8.46E-04

Epoch: 7, Train_acc:77.3%,Train_loss:0.539,Test_acc:56.6%,Test_loss:1.008,Lr:7.79E-04

Epoch: 8, Train_acc:72.1%,Train_loss:0.627,Test_acc:59.2%,Test_loss:0.954,Lr:7.79E-04

Epoch: 9, Train_acc:76.7%,Train_loss:0.590,Test_acc:72.4%,Test_loss:0.515,Lr:7.16E-04

Epoch:10, Train_acc:83.3%,Train_loss:0.368,Test_acc:67.1%,Test_loss:0.875,Lr:7.16E-04

Epoch:11, Train_acc:83.5%,Train_loss:0.379,Test_acc:72.4%,Test_loss:0.554,Lr:6.59E-04

Epoch:12, Train_acc:86.3%,Train_loss:0.332,Test_acc:72.4%,Test_loss:0.487,Lr:6.59E-04

Epoch:13, Train_acc:86.7%,Train_loss:0.307,Test_acc:72.4%,Test_loss:0.642,Lr:6.06E-04

Epoch:14, Train_acc:88.6%,Train_loss:0.302,Test_acc:73.7%,Test_loss:0.740,Lr:6.06E-04

Epoch:15, Train_acc:87.8%,Train_loss:0.278,Test_acc:76.3%,Test_loss:0.560,Lr:5.58E-04

Epoch:16, Train_acc:86.3%,Train_loss:0.304,Test_acc:75.0%,Test_loss:0.597,Lr:5.58E-04

Epoch:17, Train_acc:85.7%,Train_loss:0.302,Test_acc:73.7%,Test_loss:0.569,Lr:5.13E-04

Epoch:18, Train_acc:90.4%,Train_loss:0.249,Test_acc:75.0%,Test_loss:0.538,Lr:5.13E-04

Epoch:19, Train_acc:92.2%,Train_loss:0.229,Test_acc:77.6%,Test_loss:0.525,Lr:4.72E-04

Epoch:20, Train_acc:91.6%,Train_loss:0.230,Test_acc:72.4%,Test_loss:0.493,Lr:4.72E-04

Epoch:21, Train_acc:94.4%,Train_loss:0.196,Test_acc:77.6%,Test_loss:0.545,Lr:4.34E-04

Epoch:22, Train_acc:92.2%,Train_loss:0.220,Test_acc:76.3%,Test_loss:0.491,Lr:4.34E-04

Epoch:23, Train_acc:93.0%,Train_loss:0.217,Test_acc:78.9%,Test_loss:0.486,Lr:4.00E-04

Epoch:24, Train_acc:95.2%,Train_loss:0.189,Test_acc:75.0%,Test_loss:0.690,Lr:4.00E-04

Epoch:25, Train_acc:94.8%,Train_loss:0.185,Test_acc:80.3%,Test_loss:0.556,Lr:3.68E-04

Epoch:26, Train_acc:94.4%,Train_loss:0.187,Test_acc:80.3%,Test_loss:0.571,Lr:3.68E-04

Epoch:27, Train_acc:94.8%,Train_loss:0.183,Test_acc:75.0%,Test_loss:0.534,Lr:3.38E-04

Epoch:28, Train_acc:95.2%,Train_loss:0.169,Test_acc:76.3%,Test_loss:0.469,Lr:3.38E-04

Epoch:29, Train_acc:96.6%,Train_loss:0.163,Test_acc:80.3%,Test_loss:0.596,Lr:3.11E-04

Epoch:30, Train_acc:96.0%,Train_loss:0.165,Test_acc:76.3%,Test_loss:0.483,Lr:3.11E-04

Epoch:31, Train_acc:95.2%,Train_loss:0.170,Test_acc:78.9%,Test_loss:0.485,Lr:2.86E-04

Epoch:32, Train_acc:95.0%,Train_loss:0.167,Test_acc:77.6%,Test_loss:0.480,Lr:2.86E-04

Epoch:33, Train_acc:95.4%,Train_loss:0.161,Test_acc:80.3%,Test_loss:0.471,Lr:2.63E-04

Epoch:34, Train_acc:95.6%,Train_loss:0.159,Test_acc:76.3%,Test_loss:0.572,Lr:2.63E-04

Epoch:35, Train_acc:98.4%,Train_loss:0.148,Test_acc:80.3%,Test_loss:0.456,Lr:2.42E-04

Epoch:36, Train_acc:96.0%,Train_loss:0.153,Test_acc:78.9%,Test_loss:0.592,Lr:2.42E-04

Epoch:37, Train_acc:96.4%,Train_loss:0.156,Test_acc:77.6%,Test_loss:0.487,Lr:2.23E-04

Epoch:38, Train_acc:97.0%,Train_loss:0.144,Test_acc:78.9%,Test_loss:0.488,Lr:2.23E-04

Epoch:39, Train_acc:96.8%,Train_loss:0.148,Test_acc:76.3%,Test_loss:0.584,Lr:2.05E-04

Epoch:40, Train_acc:96.6%,Train_loss:0.154,Test_acc:78.9%,Test_loss:0.562,Lr:2.05E-04

Done

1e-4

Epoch: 1, Train_acc:54.8%,Train_loss:0.790,Test_acc:61.8%,Test_loss:0.672,Lr:1.00E-04

Epoch: 2, Train_acc:61.0%,Train_loss:0.668,Test_acc:65.8%,Test_loss:0.660,Lr:1.00E-04

Epoch: 3, Train_acc:63.5%,Train_loss:0.654,Test_acc:65.8%,Test_loss:0.583,Lr:9.20E-05

Epoch: 4, Train_acc:65.5%,Train_loss:0.627,Test_acc:67.1%,Test_loss:0.583,Lr:9.20E-05

Epoch: 5, Train_acc:67.1%,Train_loss:0.594,Test_acc:67.1%,Test_loss:0.565,Lr:8.46E-05

Epoch: 6, Train_acc:70.7%,Train_loss:0.586,Test_acc:73.7%,Test_loss:0.556,Lr:8.46E-05

Epoch: 7, Train_acc:71.5%,Train_loss:0.578,Test_acc:71.1%,Test_loss:0.532,Lr:7.79E-05

Epoch: 8, Train_acc:73.7%,Train_loss:0.541,Test_acc:69.7%,Test_loss:0.599,Lr:7.79E-05

Epoch: 9, Train_acc:74.3%,Train_loss:0.540,Test_acc:71.1%,Test_loss:0.529,Lr:7.16E-05

Epoch:10, Train_acc:72.9%,Train_loss:0.521,Test_acc:72.4%,Test_loss:0.516,Lr:7.16E-05

Epoch:11, Train_acc:76.5%,Train_loss:0.497,Test_acc:75.0%,Test_loss:0.523,Lr:6.59E-05

Epoch:12, Train_acc:75.7%,Train_loss:0.505,Test_acc:76.3%,Test_loss:0.530,Lr:6.59E-05

Epoch:13, Train_acc:78.1%,Train_loss:0.487,Test_acc:75.0%,Test_loss:0.491,Lr:6.06E-05

Epoch:14, Train_acc:79.5%,Train_loss:0.472,Test_acc:77.6%,Test_loss:0.529,Lr:6.06E-05

Epoch:15, Train_acc:76.7%,Train_loss:0.489,Test_acc:78.9%,Test_loss:0.484,Lr:5.58E-05

Epoch:16, Train_acc:79.3%,Train_loss:0.467,Test_acc:80.3%,Test_loss:0.520,Lr:5.58E-05

Epoch:17, Train_acc:80.5%,Train_loss:0.455,Test_acc:81.6%,Test_loss:0.527,Lr:5.13E-05

Epoch:18, Train_acc:81.5%,Train_loss:0.447,Test_acc:77.6%,Test_loss:0.463,Lr:5.13E-05

Epoch:19, Train_acc:81.5%,Train_loss:0.441,Test_acc:81.6%,Test_loss:0.471,Lr:4.72E-05

Epoch:20, Train_acc:83.3%,Train_loss:0.425,Test_acc:78.9%,Test_loss:0.469,Lr:4.72E-05

Epoch:21, Train_acc:79.7%,Train_loss:0.441,Test_acc:81.6%,Test_loss:0.492,Lr:4.34E-05

Epoch:22, Train_acc:80.7%,Train_loss:0.447,Test_acc:80.3%,Test_loss:0.474,Lr:4.34E-05

Epoch:23, Train_acc:84.5%,Train_loss:0.410,Test_acc:80.3%,Test_loss:0.485,Lr:4.00E-05

Epoch:24, Train_acc:82.5%,Train_loss:0.422,Test_acc:81.6%,Test_loss:0.469,Lr:4.00E-05

Epoch:25, Train_acc:81.3%,Train_loss:0.439,Test_acc:81.6%,Test_loss:0.460,Lr:3.68E-05

Epoch:26, Train_acc:83.1%,Train_loss:0.405,Test_acc:81.6%,Test_loss:0.488,Lr:3.68E-05

Epoch:27, Train_acc:85.5%,Train_loss:0.411,Test_acc:80.3%,Test_loss:0.476,Lr:3.38E-05

Epoch:28, Train_acc:83.7%,Train_loss:0.414,Test_acc:78.9%,Test_loss:0.462,Lr:3.38E-05

Epoch:29, Train_acc:84.7%,Train_loss:0.398,Test_acc:81.6%,Test_loss:0.465,Lr:3.11E-05

Epoch:30, Train_acc:86.1%,Train_loss:0.405,Test_acc:81.6%,Test_loss:0.478,Lr:3.11E-05

Epoch:31, Train_acc:85.5%,Train_loss:0.396,Test_acc:81.6%,Test_loss:0.447,Lr:2.86E-05

Epoch:32, Train_acc:85.3%,Train_loss:0.402,Test_acc:81.6%,Test_loss:0.451,Lr:2.86E-05

Epoch:33, Train_acc:85.3%,Train_loss:0.387,Test_acc:80.3%,Test_loss:0.421,Lr:2.63E-05

Epoch:34, Train_acc:85.7%,Train_loss:0.382,Test_acc:81.6%,Test_loss:0.466,Lr:2.63E-05

Epoch:35, Train_acc:85.5%,Train_loss:0.390,Test_acc:81.6%,Test_loss:0.500,Lr:2.42E-05

Epoch:36, Train_acc:85.7%,Train_loss:0.383,Test_acc:81.6%,Test_loss:0.469,Lr:2.42E-05

Epoch:37, Train_acc:86.1%,Train_loss:0.378,Test_acc:80.3%,Test_loss:0.466,Lr:2.23E-05

Epoch:38, Train_acc:84.3%,Train_loss:0.396,Test_acc:81.6%,Test_loss:0.456,Lr:2.23E-05

Epoch:39, Train_acc:88.0%,Train_loss:0.360,Test_acc:81.6%,Test_loss:0.484,Lr:2.05E-05

Epoch:40, Train_acc:87.1%,Train_loss:0.373,Test_acc:81.6%,Test_loss:0.478,Lr:2.05E-05

Done
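
Comparing the runs above by eye is tedious, so a small parser can help. The sketch below reads log lines in the format printed above and reports the epoch with the best test accuracy plus the final gap between training and test accuracy. The function names `parse_log` and `summarize` are hypothetical, and the sample text is three lines copied from the last run.

```python
import re

# Matches one log line such as:
# Epoch:17, Train_acc:80.5%,Train_loss:0.455,Test_acc:81.6%,Test_loss:0.527,Lr:5.13E-05
LOG_PATTERN = re.compile(
    r"Epoch:\s*(\d+),\s*Train_acc:([\d.]+)%,Train_loss:([\d.]+),"
    r"Test_acc:([\d.]+)%,Test_loss:([\d.]+),Lr:([\d.E+-]+)"
)


def parse_log(text):
    """Return one dict per epoch line found in the log text."""
    rows = []
    for m in LOG_PATTERN.finditer(text):
        rows.append({
            "epoch": int(m.group(1)),
            "train_acc": float(m.group(2)),
            "train_loss": float(m.group(3)),
            "test_acc": float(m.group(4)),
            "test_loss": float(m.group(5)),
            "lr": float(m.group(6)),
        })
    return rows


def summarize(rows):
    """Best test accuracy (first epoch reaching it) and final train/test gap."""
    best = max(rows, key=lambda r: r["test_acc"])
    last = rows[-1]
    return {
        "best_epoch": best["epoch"],
        "best_test_acc": best["test_acc"],
        "final_gap": round(last["train_acc"] - last["test_acc"], 1),
    }


sample = """
Epoch:16, Train_acc:79.3%,Train_loss:0.467,Test_acc:80.3%,Test_loss:0.520,Lr:5.58E-05
Epoch:17, Train_acc:80.5%,Train_loss:0.455,Test_acc:81.6%,Test_loss:0.527,Lr:5.13E-05
Epoch:18, Train_acc:81.5%,Train_loss:0.447,Test_acc:77.6%,Test_loss:0.463,Lr:5.13E-05
"""
print(summarize(parse_log(sample)))
```

Running each full log through `summarize` makes the effect of the horizontal flip easier to see: in the logs above, the flipped 1e-4 run ends with a train/test accuracy gap of about 5 points, while the unflipped dynamic 1e-4 run ends with a gap of about 20 points.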